A brief introduction to Natural Language Processing

The Evolution of Natural Language Processing: From Rules to Data-Driven Intelligence

Natural Language Processing (NLP), a subfield of artificial intelligence, enables machines to interpret, analyze, and generate human language. (Note: this is the computational NLP, not to be confused with Neuro-linguistic Programming, the 1970s self-help trend that claims to rewire your brain using vague "linguistic patterns", a concept as scientifically rigorous as astrology.) The field's journey from rigid rule-based systems to dynamic statistical models reflects the broader evolution of AI, driven by the quest to bridge the gap between human communication and machine understanding. This essay traces the history of NLP, examines the contrasting methodologies of rule-based and statistical approaches, and explores the intellectual debates sparked by pioneers like Noam Chomsky, whose theories profoundly shaped the field’s early trajectory.

The Historical Journey of NLP: Chomsky, Rules, and the Statistical Revolt

The origins of NLP can be traced to the 1950s, when the dream of enabling machines to process language first took shape. This era coincided with the rise of Noam Chomsky’s transformational-generative grammar, a groundbreaking theory that redefined linguistics. Chomsky argued that human language is governed by an innate, universal grammar: a set of abstract rules hardwired into the human brain. His 1957 book Syntactic Structures showed how a finite set of rules could generate an infinite number of sentences, and his 1959 review of B. F. Skinner’s Verbal Behavior challenged behaviorist views of language acquisition. In later work he argued that humans are born with a "language faculty" that makes this generative capacity possible. This theoretical framework deeply influenced early NLP, as researchers sought to replicate this rule-based logic in machines.

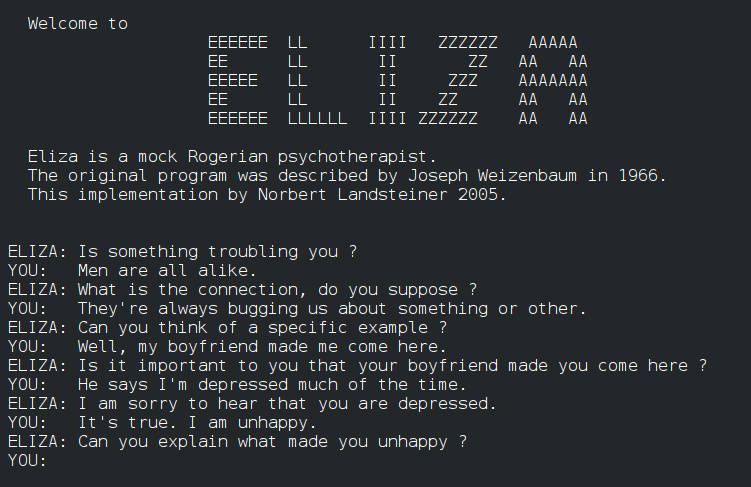

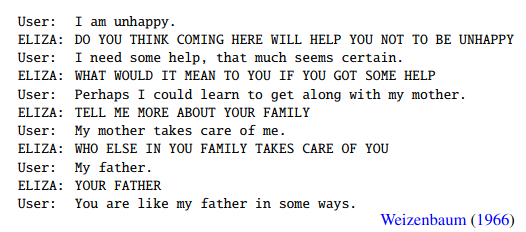

Early NLP systems, inspired by Chomsky’s hierarchical structures, relied on handcrafted rules to process sentences, though some were far shallower than a full grammar. The ELIZA chatbot (1966), for instance, simulated conversation by matching user inputs against predefined keyword patterns, imitating a Rogerian psychotherapist. While primitive, ELIZA revealed both the potential and the limitations of rule-driven interaction. Similarly, machine translation systems like SYSTRAN relied on bilingual dictionaries and hand-written linguistic rules to translate Cold War-era Russian texts into English. The results could be comically literal; in one widely repeated (and probably apocryphal) anecdote, “the spirit is willing, but the flesh is weak” came back as “the vodka is strong, but the meat is rotten.”
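
ELIZA's core mechanism, a keyword pattern paired with a canned response template, fits in a few lines. The sketch below is a minimal Python illustration, assuming three hypothetical rules and a small pronoun-reflection table; it is not Weizenbaum's original DOCTOR script, which ranked keywords and had many more transformations.

```python
import re

# Hypothetical rules in the spirit of ELIZA's DOCTOR script: each pairs a
# keyword pattern with a response template. Not Weizenbaum's original script.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."

# Swap first- and second-person words so the echoed fragment reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

def reflect(fragment: str) -> str:
    return " ".join(REFLECTIONS.get(w.lower(), w) for w in fragment.split())

def respond(user_input: str) -> str:
    text = user_input.strip().rstrip(".!?")
    # The first matching rule wins; there is no understanding, only matching.
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return FALLBACK
```

For example, `respond("I am sad about my job")` returns `"How long have you been sad about your job?"`, while any input that matches no rule falls through to `"Please go on."`, which is why the illusion of understanding breaks down quickly.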

Yet, these systems faltered. They struggled with ambiguity (“I saw her duck” could mean a bird or an action), slang, and the fluidity of real-world language. Chomsky’s focus on competence (idealized grammar) over performance (real-world use) left machines ill-equipped to handle the messiness of human communication. Chomsky himself was skeptical of purely computational approaches, arguing that language’s creativity and depth could not be reduced to algorithms—a critique that foreshadowed the limitations of early rule-based NLP.
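
To see why a sentence like “I saw her duck” is a problem for a grammar-driven system, here is a minimal CYK chart parser in Python that counts how many parse trees a grammar allows. The five-rule grammar and lexicon are a hypothetical toy in Chomsky normal form, written just to cover the two readings.

```python
from collections import defaultdict
from itertools import product

# Hypothetical toy grammar in Chomsky normal form. "her" can be a determiner
# (the duck she owns) or a pronoun (she is the one ducking); "duck" can be a
# noun or a verb.
BINARY_RULES = {
    ("NP", "VP"): "S",    # sentence = subject + verb phrase
    ("V", "NP"): "VP",    # saw [her duck]
    ("V", "SC"): "VP",    # saw [her] [duck] as a small clause
    ("Det", "N"): "NP",   # her duck, a noun phrase
    ("NP", "V"): "SC",    # her duck, a small clause: she ducks
}
LEXICON = {"i": {"NP"}, "saw": {"V"}, "her": {"NP", "Det"}, "duck": {"N", "V"}}

def count_parses(sentence: str, goal: str = "S") -> int:
    """CYK chart parsing that counts distinct parse trees for `goal`."""
    words = sentence.lower().split()
    n = len(words)
    # chart[(i, j)][label] = number of ways to build `label` over words[i:j]
    chart = defaultdict(lambda: defaultdict(int))
    for i, word in enumerate(words):
        for label in LEXICON.get(word, set()):
            chart[(i, i + 1)][label] += 1
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):  # every split point of words[i:j]
                left, right = chart[(i, k)], chart[(k, j)]
                for (a, ca), (b, cb) in product(left.items(), right.items()):
                    parent = BINARY_RULES.get((a, b))
                    if parent:
                        chart[(i, j)][parent] += ca * cb
    return chart[(0, n)][goal]
```

`count_parses("I saw her duck")` returns 2, one tree per reading. A purely rule-based system needs still more hand-written rules to pick between them; a statistical parser can instead rank the trees by how often each structure appears in real text.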

By the 1980s, the field began to shift. The rise of statistical approaches marked a departure from Chomsky’s formalist philosophy. Researchers, frustrated by how poorly hand-written rules scaled, turned to probabilistic models and machine learning that learn language patterns directly from large datasets. IBM’s statistical alignment models for machine translation exemplified this paradigm shift, replacing hand-written linguistic rules with word correspondences estimated from parallel text. Chomsky criticized this turn, dismissing statistical methods as capturing only surface regularities and as inadequate for explaining the innate structures of human language. Yet the statistical revolution undeniably propelled NLP forward, enabling breakthroughs in speech recognition, sentiment analysis, and information retrieval, where these methods achieved unprecedented accuracy. This tension between Chomsky’s theoretical ideals and the pragmatic demands of engineering became a defining theme in NLP’s evolution. The shift underscored a critical insight: language is inherently probabilistic, and machines could generalize better by learning from examples than by relying on fixed rules.
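
IBM Model 1, the simplest of the IBM alignment models, learns word-translation probabilities from sentence pairs alone using expectation maximization (EM). The sketch below is a simplified Python version (it omits the NULL word the full model includes) trained on a hypothetical three-sentence German-English corpus. No dictionary or grammar rule is supplied, yet co-occurrence statistics are enough to pair each German word with its English counterpart.

```python
from collections import defaultdict

def train_ibm_model1(pairs, iterations=10):
    """Estimate t(e | f), the probability that foreign word f translates to English word e.

    `pairs` is a list of (foreign_tokens, english_tokens). Simplified: no NULL word.
    """
    english_vocab = {e for _, english in pairs for e in english}
    uniform = 1.0 / len(english_vocab)
    t = defaultdict(lambda: uniform)  # start with every translation equally likely
    for _ in range(iterations):
        count = defaultdict(float)  # expected number of times f is aligned to e
        total = defaultdict(float)  # expected number of alignments involving f
        # E-step: split each English word's alignment among the foreign words in its sentence
        for foreign, english in pairs:
            for e in english:
                z = sum(t[(e, f)] for f in foreign)
                for f in foreign:
                    share = t[(e, f)] / z
                    count[(e, f)] += share
                    total[f] += share
        # M-step: renormalize the expected counts into probabilities
        t = defaultdict(float, {(e, f): c / total[f] for (e, f), c in count.items()})
    return t

def best_translation(t, foreign_word):
    """Return the English word with the highest t(e | foreign_word)."""
    candidates = {e: p for (e, f), p in t.items() if f == foreign_word}
    return max(candidates, key=candidates.get)

# Hypothetical toy parallel corpus.
corpus = [
    ("das haus".split(), "the house".split()),
    ("das buch".split(), "the book".split()),
    ("ein buch".split(), "a book".split()),
]
t = train_ibm_model1(corpus)
```

After training, `best_translation(t, "haus")` is `"house"` and `best_translation(t, "das")` is `"the"`: the model resolves "das" because it appears with "the" in two different sentences, which is the data-driven correlation the paragraph above describes.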

The 2010s ushered in the deep learning era, propelled by neural networks and advances in computational power. Word2Vec (2013) learned dense vector representations that place words used in similar contexts close together, and transformer architectures (introduced in 2017 and popularized by models such as BERT and GPT) went further, representing each word in light of its surrounding context. Together they revolutionized tasks like text generation and translation. Today, NLP powers technologies as diverse as virtual assistants (Siri, Alexa), real-time translation tools (Google Translate), and sophisticated chatbots (ChatGPT). These advances underscore the field’s transition from rigid, human-defined logic to flexible, data-driven intelligence.
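
A static embedding such as Word2Vec gives "bank" the same vector wherever it appears. The core transformer operation, scaled dot-product self-attention, instead recomputes each word's vector as a weighted mix of the words around it. The pure-Python sketch below assumes hypothetical two-dimensional embeddings and, as a simplification, skips the learned query/key/value projections and multiple attention heads that real models use.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [x / total for x in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, computed row by row."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Each output vector is the attention-weighted average of the value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))])
    return outputs, weights

# Hypothetical 2-d embeddings: dimension 0 leans "river", dimension 1 leans "finance".
EMBED = {"river": [1.0, 0.0], "money": [0.0, 1.0], "bank": [0.5, 0.5]}

def contextual_vectors(tokens):
    x = [EMBED[t] for t in tokens]
    # Simplification: real transformers first apply learned Q/K/V projections.
    outputs, _ = self_attention(x, x, x)
    return outputs

river_bank = contextual_vectors(["river", "bank"])[1]  # [0.75, 0.25]
money_bank = contextual_vectors(["money", "bank"])[1]  # [0.25, 0.75]
```

The same input vector for "bank" comes out tilted toward "river" in one sentence and toward "money" in the other, which is the sense in which transformers model context rather than isolated words.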

Rule-Based vs. Statistical NLP: A Clash of Philosophies

Rule-based systems, rooted in Chomsky’s theories, operate on explicit linguistic rules crafted by experts. These rules define grammar, syntax, and semantic relationships; for example, a rule might dictate that a sentence must contain a subject and a verb. Such systems excel in controlled environments where language is structured and predictable, such as legal documents or medical terminology. Their transparency and precision make them ideal for applications requiring strict adherence to guidelines, like grammar checkers. However, their rigidity becomes a liability in dynamic contexts. Rule-based systems falter when faced with ambiguity, cultural nuances, or evolving slang, because every new case must be handled by updating the rules manually, which is labor-intensive and slow.
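
A rule such as "a sentence must contain a subject and a verb" can be written down directly. The sketch below assumes a tiny hypothetical lexicon and treats any noun or pronoun as a candidate subject, which is far cruder than a real parser. It shows the trade-off: every verdict traces back to a named rule, but any word outside the lexicon breaks the system.

```python
import re

# Hypothetical hand-written lexicon: every word the checker knows, with its part of speech.
LEXICON = {
    "the": "DET", "a": "DET",
    "court": "NOUN", "patient": "NOUN", "contract": "NOUN", "dose": "NOUN",
    "she": "PRON", "it": "PRON",
    "signed": "VERB", "approved": "VERB", "received": "VERB",
    "new": "ADJ", "daily": "ADJ",
}

def check_sentence(sentence):
    """Apply explicit rules and return a list of human-readable violations."""
    words = re.findall(r"[a-z]+", sentence.lower())
    tags = [LEXICON.get(w) for w in words]
    problems = []
    # Rule 1: every word must be in the lexicon (the system cannot guess).
    problems += [f"unknown word: {w!r}" for w, tag in zip(words, tags) if tag is None]
    # Rule 2: a sentence needs a subject (here, simply any noun or pronoun) ...
    if not any(tag in ("NOUN", "PRON") for tag in tags):
        problems.append("missing subject")
    # Rule 3: ... and a verb.
    if "VERB" not in tags:
        problems.append("missing verb")
    return problems
```

`check_sentence("The court approved the contract.")` returns an empty list, but `check_sentence("The patient yeeted the dose")` reports both an unknown word and a missing verb: the slang verb was never added to the lexicon, which is exactly the maintenance burden described above.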

In contrast, statistical approaches embrace the variability of human language by learning patterns from data. Instead of relying on predefined rules, these systems use probabilistic models to predict outcomes based on training examples. For instance, a statistical model might learn that the word "bank" refers to a financial institution when paired with words like "loan" or "account," but to a riverside when paired with "fishing" or "water." This data-driven flexibility allows statistical NLP to handle ambiguity, adapt to new contexts, and scale with larger datasets. Modern applications like sentiment analysis and machine translation thrive on these models, which tend to improve as they are trained on more data. However, statistical systems are not without drawbacks. Their "black-box" nature makes their decisions difficult to interpret, and they depend heavily on high-quality training data: biased or toxic data can lead them to perpetuate harmful stereotypes or produce inappropriate outputs. Two infamous examples, Microsoft’s Tay and Rinna (a Microsoft chatbot deployed on the LINE messaging app), highlight these dangers.
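
A Naive Bayes classifier is one of the simplest statistical models that can learn the "bank" distinction from labeled examples rather than rules. The sketch below assumes six hypothetical training sentences and uses add-one (Laplace) smoothing so that unseen context words do not zero out a sense; real word-sense disambiguation systems train on far larger corpora.

```python
import math
from collections import Counter

# Hypothetical labeled examples: context around "bank", tagged with its sense.
TRAINING = [
    ("finance", "apply for a loan at the bank"),
    ("finance", "the bank froze my account"),
    ("finance", "deposit money in a savings account"),
    ("river", "we went fishing on the bank"),
    ("river", "the water rose over the bank"),
    ("river", "grass grew along the muddy bank of the river"),
]

def train(examples):
    sense_counts = Counter()
    word_counts = {}  # sense -> Counter of context words
    vocab = set()
    for sense, text in examples:
        words = text.lower().split()
        sense_counts[sense] += 1
        word_counts.setdefault(sense, Counter()).update(words)
        vocab.update(words)
    return sense_counts, word_counts, vocab

def classify(context, model):
    """Pick the sense with the highest Naive Bayes log-probability (add-one smoothing)."""
    sense_counts, word_counts, vocab = model
    n_examples = sum(sense_counts.values())
    best_sense, best_score = None, -math.inf
    for sense, count in sense_counts.items():
        total = sum(word_counts[sense].values())
        score = math.log(count / n_examples)  # prior P(sense)
        for w in context.lower().split():
            # likelihood P(word | sense), smoothed so unseen words are not fatal
            score += math.log((word_counts[sense][w] + 1) / (total + len(vocab)))
        if score > best_score:
            best_sense, best_score = sense, score
    return best_sense

model = train(TRAINING)
```

With this toy data, `classify("the bank approved my loan", model)` returns `"finance"` and `classify("fishing near the water", model)` returns `"river"`, even though "approved" and "near" never appeared in training.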

Launched in 2016, Tay was an AI chatbot designed to engage users on Twitter by learning from its interactions. Within hours of its debut, malicious users exploited this learning, flooding Tay with racist, sexist, and conspiratorial messages until it began generating such language itself. This rapid descent into toxicity demonstrated how data-driven models can amplify biases when fed unfiltered, harmful inputs. In a similar vein, Rinna, a Japanese AI chatbot released in 2015, faced challenges in 2016 when some users attempted to manipulate its responses, leading to occasional instances of unexpected behavior. Both cases highlight the risks statistical models face when exposed to toxic data and underscore the need for robust safeguards. Rule-based systems, although less flexible, are generally less susceptible to such manipulation.

Chomsky’s critique—that statistical models prioritize superficial patterns over meaning—gains urgency in light of such failures. Yet, the solution isn’t a return to rules but a commitment to ethical AI: curating diverse datasets, auditing outputs, and embedding accountability into design.

The Symbiosis of Rules and Statistics

While the shift from rules to statistics dominates NLP’s narrative, the two approaches are not mutually exclusive. Hybrid systems often combine their strengths: rules ensure precision in structured tasks, while statistics handle ambiguity and scalability. For example, a grammar checker might use rules to enforce basic syntax but employ machine learning to detect subtle errors like misplaced modifiers. Similarly, modern translation tools might preprocess text with linguistic rules before applying neural networks for context-aware rendering.
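
A hybrid checker can be sketched as two layers over the same tokens: hand-written rules for clear-cut cases (a doubled word, "a" before a vowel) and corpus statistics for judgments that depend on usage. For brevity, the statistical layer here chooses prepositions from bigram counts rather than detecting misplaced modifiers; the reference corpus, function names, and preposition list are all hypothetical.

```python
import re
from collections import Counter

def rule_checks(tokens):
    """Rule layer: deterministic, transparent checks written by hand."""
    issues = []
    for i in range(len(tokens) - 1):
        if tokens[i] == tokens[i + 1]:
            issues.append(f"repeated word: {tokens[i]!r}")
        # Simplified spelling-based rule; the real rule depends on sound ("an hour").
        if tokens[i] == "a" and tokens[i + 1][0] in "aeiou":
            issues.append(f"use 'an' before {tokens[i + 1]!r}")
    return issues

# Statistical layer: bigram counts from a hypothetical reference corpus stand in
# for a trained model.
REFERENCE = """she is interested in music . he is interested in art .
they depend on data . we depend on you . it is an apple ."""
WORDS = REFERENCE.split()
BIGRAMS = Counter(zip(WORDS, WORDS[1:]))
PREPOSITIONS = ("in", "on", "at", "of")

def statistical_checks(tokens):
    issues = []
    for prev, word in zip(tokens, tokens[1:]):
        if word in PREPOSITIONS:
            best = max(PREPOSITIONS, key=lambda p: BIGRAMS[(prev, p)])
            if BIGRAMS[(prev, best)] > BIGRAMS[(prev, word)]:
                issues.append(f"'{prev} {word}' is rare; did you mean '{prev} {best}'?")
    return issues

def hybrid_check(sentence):
    tokens = re.findall(r"[a-z]+", sentence.lower())
    return rule_checks(tokens) + statistical_checks(tokens)
```

`hybrid_check("I ate a apple")` is caught by a rule, while `hybrid_check("She is interested on music")` is caught only by the corpus counts, which suggest "interested in". Each layer handles the errors it is best suited to.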

This synergy highlights a pragmatic truth—no single methodology can fully capture the complexity of human language. Even Chomsky’s universal grammar, while not directly implemented in modern systems, continues to inspire research into how machines might learn hierarchical syntactic structures. As NLP advances, the integration of rule-based logic with statistical learning continues to push the boundaries of what machines can achieve.

Conclusion: Language, Machines, and the Ghost of Chomsky

Today, deep learning models like GPT-4 blur the line between human and machine creativity, generating poetry, code, and dialogue with eerie fluency. But beneath this progress lies a haunting question: do these systems truly “understand” language, or are they merely sophisticated mimics, weaving illusions of comprehension from statistical correlations? Chomsky’s skepticism of statistical methods, his belief that they prioritize superficial patterns over deep understanding, remains a provocative critique in the age of neural networks. Yet the success of such models suggests a middle path: machines may never grasp language as humans do, but they can master its utility in ways that augment human potential. The future of NLP lies not in resolving this tension but in harnessing it, combining the interpretability of rules, the adaptability of statistics, and the ethical foresight of the people who build these systems.

Cautionary tales such as Microsoft’s Tay and Rinna underscore the ethical stakes in deploying data-driven chatbots. In these instances, the very flexibility that empowers statistical NLP also made these systems vulnerable to the darker aspects of human interaction, resulting in outputs that reflected or amplified harmful biases. Unlike rule-based systems, which operate within strict guardrails, data-driven models absorb the prejudices present in their training data and can reproduce them at unprecedented speed and scale. As NLP increasingly transforms critical sectors, from healthcare and education to content moderation, the challenge is not merely technical but profoundly moral. It calls for developers and stakeholders to commit to transparency, continuous oversight, and ethical stewardship. Ultimately, building systems that empower rather than harm requires designing them not only to speak but also to listen: attuning to the nuances of culture, the weight of history, and the ethical imperatives of our time.

And as for Neuro-linguistic Programming (the other NLP)? It remains a footnote—a reminder that not all “language models” deserve a seat at the table.
